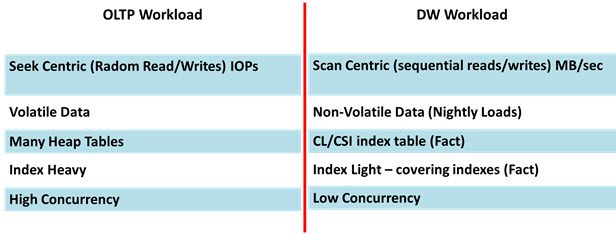

SQL Server Data Warehouse (DW) workloads differ in significant ways from traditional Online-Line-Transactional-Processing (OLTP) workloads in how they should be performanced tuned because of the different query patterns inherent in both designs. Below is a table which summarizes some of the most important differences.

An OLTP workload is characterized by seek centric operations that are best measured by IOPS (In-and-Out-Operations-Per-Second). The typical OLTP query only requires a few rows from several tables (3rd normal form) to be returned from disk. An OLTP system’s performance is determined by how quickly it can seek those few rows and return them back to the application. However, a typical data warehouse query will return a lot of historical data from disk to be aggregated on the CPU. For example to get the Total Sales Amount for an entire year all of the sales transactions for that year will have to move from disk onto the CPU to be aggregated to the yearly level. This large data movement requires scan centric operations and is best measure by throughput (MB/sec).

End users and applications update the OLTP system through DML (Data Manipulation Language: updates, inserts, and deletes) operations continuously throughout the day so that data is described as being volatile while a data warehouse typical only preforms the bulk of its DML operations during a daily ETL process.

In order for an OLTP system to quickly locate the rows that it requires many indexes will need to be created, however data warehouse tables do not require nearly as many indexes. In the example of yearly Total Sales Amount an index will help located all the transactions for the entire year but will not be able to improve the time that it takes to move all of those transaction from disk to the CPU. Therefore, only relatively few indexes are required.

OLTP systems are also characterized by high concurrency because many users or applications can be querying the system at the same time. Data warehouses, however, normally only support a few users who are typically characterized as analysts.

Measuring Data Warehouse Workloads

Due to the fact that data warehouse workloads differ significantly for that of OLTP systems a tool capable of simulating data warehouse workloads will need to be used to performance tune SQL Server 2012 or SQL Server 2008. The best tool for this scenario is SQLIO. SQLIO is capable of measuring SQL Server Data Warehouse performance in MB/second under a variety of sequential read and write scenarios to determine if the storage-sub system of a SQL Data Warehouse is tuned for optimal perfromance of data warehouse workloads.

Below is an example of the results of SQLIO represented by a line chart:

Since Sequential Reads (green) and Sequential Writes (blue) both perform as good as or better than their random counterparts then this storage system is tunned for SQL Server Data Warehouse workloads.

From the chart it can also be determined that this SQL Server Data Warehouse Server has a maximum Sequential Read throughput of about 1,500 MB/sec. and a maximum Sequential Write Throughput of about 1,200 MB/sec.